Research

The biological brain is the only example of general intelligence that we know of. What are the core design principles that give rise to this remarkable ability?

Since the brain came about through a complicated evolutionary process, identifying these principles a priori is challenging.

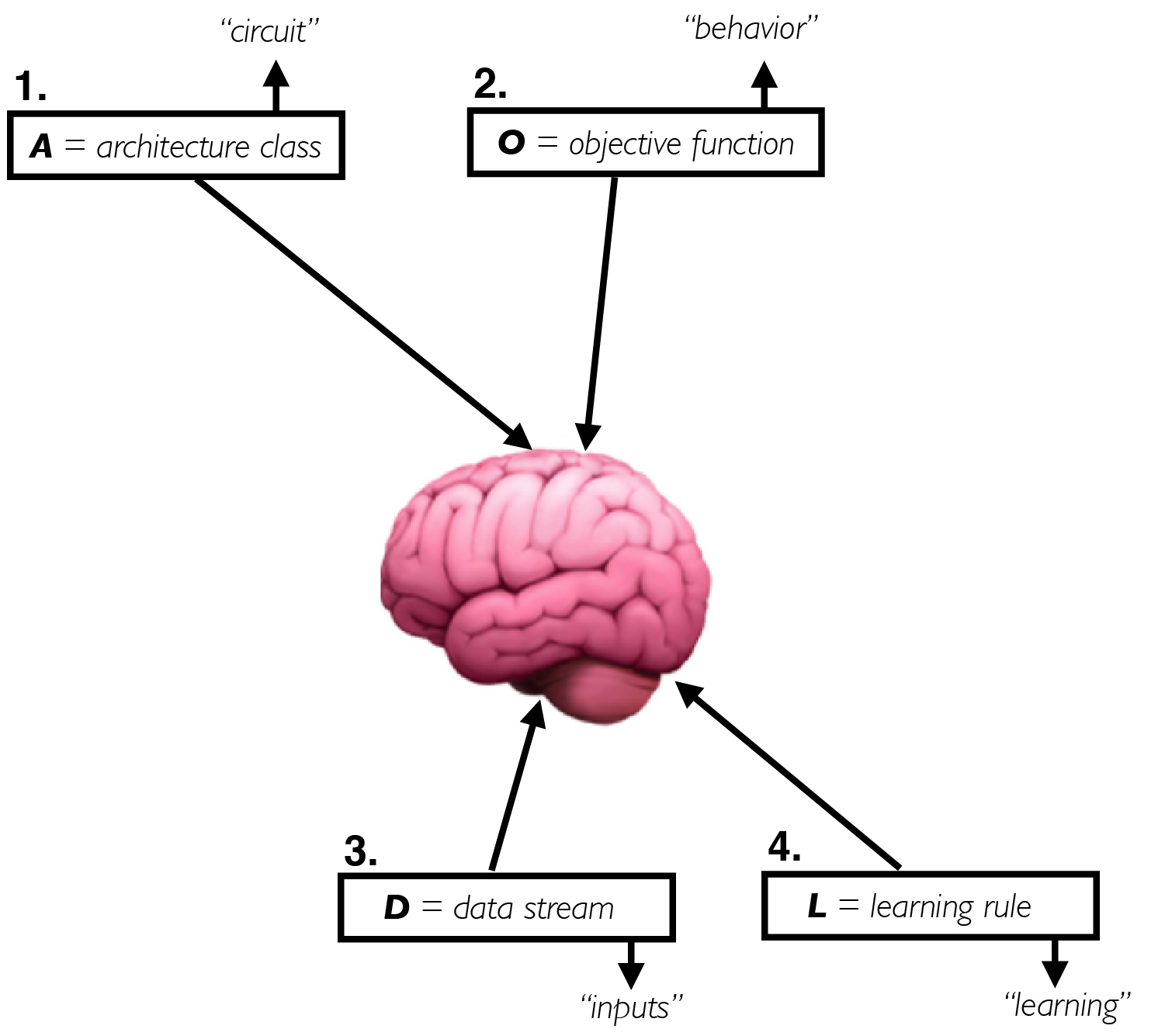

My primary focus, therefore, is to “reverse engineer” neural circuits toward this goal by simulating the evolutionary process via in silico neural network models that toggle four main components:

The long-term aim is to compare the artificial networks that arise from these components against high-throughput neural recordings and behavioral data using quantitative metrics, and thereby to produce better normative accounts of how the brain produces intelligent behavior and to build better artificial intelligence algorithms along the way.

My PhD thesis on these topics can be found here, along with a talk recording.

Below are some representative papers that are relevant to the above questions. When possible, I try to link to the freely accessible preprint; however, this may differ from the final published version. For a full publication list, see my CV. See here for presentations on some of this work.

Selected Papers

(*: joint first author; **: joint senior author)

J. Feather*, M. Khosla*, N. A. Ratan Murthy*, A. Nayebi*. Brain-model evaluations need the NeuroAI Turing Test. Preprint, submitted for review.

A. Nayebi. Barriers and pathways to human-AI alignment: a game-theoretic approach. Preprint, submitted for review. [talk recording]

A. Nayebi, R. Rajalingham, M. Jazayeri, G.R. Yang. Neural foundations of mental simulation: future prediction of latent representations on dynamic scenes. Advances in Neural Information Processing Systems (NeurIPS), Volume 36 (2023): 70548-70561. (Selected for spotlight presentation) [code][NeurIPS 5 min talk recording][MIT CBMM talk recording][MIT News article][MIT CBMM short video]

A. Nayebi*, N.C.L. Kong*, C. Zhuang, J.L. Gardner, A.M. Norcia, D.L.K. Yamins. Mouse visual cortex as a limited resource system that self-learns an ecologically-general representation. PLOS Computational Biology, Volume 19 (2023): 1-36. [code][talk recording]

A. Nayebi, J. Sagastuy-Brena, D.M. Bear, K. Kar, J. Kubilius, S. Ganguli, D. Sussillo, J.J. DiCarlo, D.L.K. Yamins. Recurrent connections in the primate ventral visual stream mediate a tradeoff between task performance and network size during core object recognition. Neural Computation, Volume 34 (2022): 1652-1675. [code]

A. Nayebi, A. Attinger, M.G. Campbell, K. Hardcastle, I.I.C. Low, C.S. Mallory, G.C. Mel, B. Sorscher, A.H. Williams, S. Ganguli, L.M. Giocomo, D.L.K. Yamins. Explaining heterogeneity in medial entorhinal cortex with task-driven neural networks. Advances in Neural Information Processing Systems (NeurIPS), Volume 34 (2021). (Selected for spotlight presentation) [code][talk recording]

C. Zhuang, S. Yan, A. Nayebi, M. Schrimpf, M.C. Frank, J.J. DiCarlo, D.L.K. Yamins. Unsupervised neural network models of the ventral visual stream. Proceedings of the National Academy of Sciences of the United States of America (PNAS), Volume 118 (2021). [code][talk recording]

A. Nayebi*, S. Srivastava*, S. Ganguli, D.L.K. Yamins. Identifying learning rules from neural network observables. Advances in Neural Information Processing Systems (NeurIPS), Volume 33 (2020). (Selected for spotlight presentation) [code][talk recording][blogpost][The Economist news article]

D. Kunin*, A. Nayebi*, J. Sagastuy-Brena*, S. Ganguli, J. Bloom, D.L.K. Yamins. Two routes to scalable credit assignment without weight symmetry. Proceedings of the 37th International Conference on Machine Learning (ICML), PMLR 119 (2020): 5511-5521. [code][talk recording]

J. Kubilius*, M. Schrimpf*, K. Kar, R. Rajalingham, H. Hong, N.J. Majaj, E.B. Issa, P. Bashivan, J. Prescott-Roy, K. Schmidt, A. Nayebi, D.M. Bear, D.L.K. Yamins, J.J. DiCarlo. Brain-like object recognition with high-performing shallow recurrent ANNs. Advances in Neural Information Processing Systems (NeurIPS), Volume 32 (2019): 12805-12816. (Selected for oral presentation) [code][talk recording]

H. Tanaka, A. Nayebi, N. Maheswaranathan, L.T. McIntosh, S.A. Baccus, S. Ganguli. From deep learning to mechanistic understanding in neuroscience: the structure of retinal prediction. Advances in Neural Information Processing Systems (NeurIPS), Volume 32 (2019): 8537-8547.

A. Nayebi*, D.M. Bear*, J. Kubilius*, K. Kar, S. Ganguli, D. Sussillo, J.J. DiCarlo, D.L.K. Yamins. Task-driven convolutional recurrent models of the visual system. Advances in Neural Information Processing Systems (NeurIPS), Volume 31 (2018): 5290-5301. [code][blogpost]

L.T. McIntosh*, N. Maheswaranathan*, A. Nayebi, S. Ganguli, S.A. Baccus. Deep learning models of the retinal response to natural scenes. Advances in Neural Information Processing Systems (NIPS), Volume 29 (2016): 1369-1377. [code]